AXIOM Beta/AXIOM Beta Software

Note: Some of the instructions we have prepared are written so that they can also be followed by people without advanced technical knowledge. If you are more of a "techie", please keep this in mind and skip the steps or passages that cover things you already know.

1 Getting Started

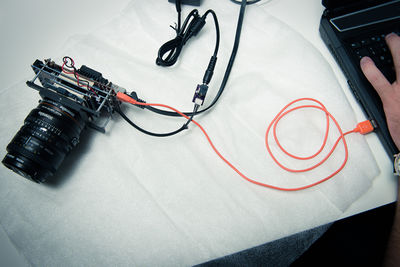

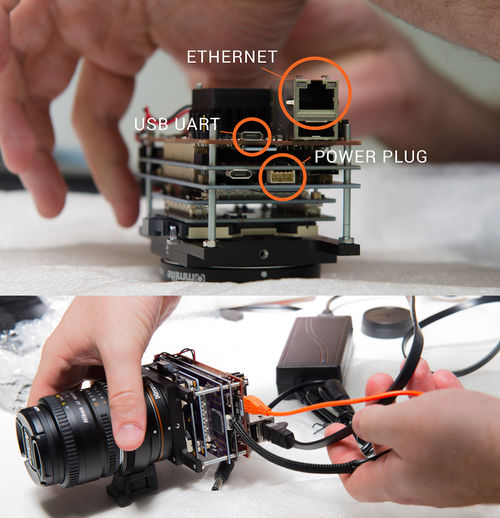

1.1 Prep your AXIOM Beta camera for use

- Use a micro-USB cable to connect the camera's MicroZed development board (USB UART) to a computer. The MicroZed board is the backmost, red PCB. (There is another micro-USB socket on the Power Board, but that is the JTAG Interface.)

- Connect the Ethernet port on the MicroZed to an Ethernet port on your computer. Newer, smaller machines that come without a built-in Ethernet port may need an Ethernet adapter.

- Connect the AC adapter to the camera's Power Board. (The power cord plugs into an adapter that connects to the Power Board; to power the camera off at a later point, you need not disconnect the adapter from the board but can just unplug the cord from the adapter.)

1.2 Prep your computer for use with your camera

To communicate with your AXIOM Beta camera, you will send it instructions via your computer's command line.

In case you have worked little or not at all with a shell (console, terminal) before, we have prepared detailed instructions to help you get set up. The steps needed to prepare your machine sometimes differ between operating systems, so pick the ones that apply to your system.

Note that dollar signs ($) placed in front of commands are not meant to be typed in; they denote the command line prompt (a signal indicating that the computer is ready for user input). Documentation uses them to distinguish commands from the output those commands produce. The prompt might look different on your machine (e.g. an angle bracket >) and may be preceded by your user name, computer name or the name of the directory you are currently in.

1.2.1 USB to UART drivers

For the USB connection to work, you need drivers that bridge USB to UART (USB to serial). Under Linux, this works out of the box in most distributions; for other operating systems, the drivers can be downloaded from e.g. Silicon Labs' website. Pick the software provided for your OS and install it.

1.2.2 Serial console

The tool we recommend for connecting to the AXIOM Beta camera via serial port with Mac OS X or Linux is minicom; for connections from Windows machines, we have used PuTTY.

/Mac OS X setup/

/Linux setup/

/minicom configuration/ instructions (Mac and Linux)

1.3 Serial connection (via USB)

Although the AXIOM Beta can be reached via USB UART (USB to serial), the serial connection is not the preferred way to communicate with the camera; it is mainly there for monitoring purposes.

However, a serial connection is needed to set up communication via Ethernet/LAN (the better-suited way to talk to the camera): as the Beta only allows secure (SSH) connections over Ethernet, you first have to connect to the camera via the serial port and copy over your SSH key.

Below are two different methods for connecting to the AXIOM Beta camera: a program called minicom and an alternative program called screen. We suggest trying them in this order: if you can connect with minicom, great; if not, try screen.

1.3.1 Connect using minicom

Note that you will not be able to use the terminal window you initiate the serial connection in for anything else (it needs to remain open while you access the camera), so it might make sense to open a separate window just for this purpose.

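With minicom installed and properly configured, all you need to do is run the following command to start it with the correct settings (USB0 is the name of the saved minicom configuration; -8 lets 8-bit characters pass through unmodified):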

$ minicom -8 USB0

On successful connection, you will be prompted to enter user credentials (which are needed to log into the camera).

If your terminal remains blank except for the minicom welcome screen (information about your connection settings), try pressing Enter. If the prompt for user credentials still does not appear (while testing, we found that the initial connection with minicom does not always work), disconnect the camera from the power adapter, then reconnect it: in your minicom window you should now see the camera's operating system booting up, followed by the login prompt. From then on, connecting with minicom should work smoothly and at most require you to press Enter to make the login prompt appear.

The default credentials are:

- user: root

- password: beta

1.3.2 Alternative tools for serial connection

Before you can use any tool to initiate a serial connection with your Beta camera, you need to know through which special device file it can be accessed.

Once the Beta is connected and powered on (and you installed the necessary drivers), it gets listed as a USB device in the /dev directory of your file system, e.g.

/dev/ttyUSB0 (on Linux)

or

/dev/cu.SLAB_USBtoUART or /dev/tty.SLAB_USBtoUART (on Mac).

You can use a command such as:

$ ls -al /dev | grep -i usb

to list all device files whose names contain "usb" (case-insensitive); the camera's serial port should be among them.

1.3.2.1 screen

To connect to the camera, use the command:

$ screen file_path 115200

where file_path is the full path to the special device file (e.g. /dev/ttyUSB0 or /dev/cu.SLAB_USBtoUART).

You might have to run the command with superuser rights, i.e.:

$ sudo screen file_path 115200

On successful connection, you will be prompted to enter user credentials needed for logging into the camera.

If your terminal remains blank, try pressing enter.

The default credentials are:

- user: root

- password: beta

1.3.3 Disconnect

To log out of the camera's operating system, use:

$ exit

The result will be a logout message followed by a new login prompt.

To suspend or quit your screen session (and return to your regular terminal window), use one of the following key combinations:

- CTRL+a CTRL+z (suspend)

- CTRL+a CTRL+\ (quit)

1.4 Ethernet connection (using SSH)

To access the AXIOM Beta via Ethernet – which is the preferred way to communicate with it and will also work remotely, from a machine which is not directly connected to the camera – authentication via SSH is required.

If you have never created SSH keys before or need a refresher in order to create a new pair, see the How-to below.

1.4.1 Get/set IP address

If your network has a DHCP server running somewhere (e.g. a router), the AXIOM Beta will receive an IP address automatically as soon as it is connected to the network.

Otherwise, you will have to set the Beta's IP address manually with the ifconfig command over the serial console (USB).

1.4.1.1 IP address check

While connected to the AXIOM Beta via USB, you can use the command:

$ ip a l

on the Beta to check whether it is currently assigned an IP address. Find the entry which begins with eth0 and check if it contains a line starting with inet followed by an IP address. If it does, this is the IP address the Beta can be reached at.

Example output with no IP address assigned:

```
1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default
    link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
    inet 127.0.0.1/8 scope host lo
       valid_lft forever preferred_lft forever
    inet6 ::1/128 scope host
       valid_lft forever preferred_lft forever
2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000
    link/ether 00:0a:35:00:01:26 brd ff:ff:ff:ff:ff:ff
    inet6 fe80::20a:35ff:fe00:126/64 scope link
       valid_lft forever preferred_lft forever
```
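If you want to automate this check, for example in a script on your computer that reads the console output, the logic can be sketched in Ruby (the language of the EDL parser further down this page). This is a minimal sketch that assumes the ip a l output format shown above; the function name eth0_ipv4 is hypothetical.

```ruby
# Sketch: return the IPv4 address (with prefix) of an interface from the
# output of "ip a l", or nil if no "inet" line is listed for it.
# Assumes the layout shown above: interface headers start with
# "<index>: <name>:", IPv4 address lines with "inet ".
def eth0_ipv4(ip_output, iface = "eth0")
  in_iface = false
  ip_output.each_line do |line|
    if line =~ /^\d+:\s+(\S+?):/
      # A new interface block starts; track whether it is the one we want.
      in_iface = (Regexp.last_match(1) == iface)
    elsif in_iface && line =~ /^\s*inet\s+(\S+)/
      return Regexp.last_match(1) # e.g. "192.168.0.9/24"
    end
  end
  nil # "inet6" lines do not match, so nil means no IPv4 address
end

sample = <<~OUT
  2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000
      link/ether 00:0a:35:00:01:26 brd ff:ff:ff:ff:ff:ff
      inet6 fe80::20a:35ff:fe00:126/64 scope link
OUT
puts eth0_ipv4(sample).inspect # prints nil: no IPv4 address assigned yet
```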

1.4.1.2 Set IP address

If the IP address check is not successful – in that it does not produce an IP address you can connect to – you will have to set your Beta's IP address manually with the ifconfig command.

While connected to the Beta, you can do that like so:

$ ifconfig eth0 192.168.0.9/24 up

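It does not really matter which IP address you choose, as long as it is one reserved for private use (e.g. an address in the 192.168.x.x range), you use the /24 prefix and, if the Beta is connected directly to your computer, your computer's Ethernet interface has an address in the same /24 network (e.g. 192.168.0.1).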
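The two conditions, a private address and a shared /24 network with the computer, can be checked with Ruby's standard ipaddr library. A minimal sketch; the helper names private_ipv4? and same_subnet? are hypothetical, and the private ranges used are the RFC 1918 blocks.

```ruby
require "ipaddr"

# RFC 1918 private IPv4 ranges
PRIVATE_RANGES = %w[10.0.0.0/8 172.16.0.0/12 192.168.0.0/16].map { |r| IPAddr.new(r) }

# true if addr lies in one of the private ranges
def private_ipv4?(addr)
  ip = IPAddr.new(addr)
  PRIVATE_RANGES.any? { |range| range.include?(ip) }
end

# true if both addresses are in the same network for the given prefix length
def same_subnet?(a, b, prefix = 24)
  IPAddr.new(a).mask(prefix) == IPAddr.new(b).mask(prefix)
end

beta     = "192.168.0.9" # address given to the Beta with ifconfig
computer = "192.168.0.1" # example address for the computer's Ethernet port
puts private_ipv4?(beta) && same_subnet?(beta, computer) # prints true
```

A computer at 192.168.1.1, by contrast, would fail the same_subnet? check against a Beta at 192.168.0.9.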

If you now use $ ip a l (again) to check for the camera's IP address, you should see the address you assigned listed after inet, e.g.:

```
1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default
    link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
    inet 127.0.0.1/8 scope host lo
       valid_lft forever preferred_lft forever
    inet6 ::1/128 scope host
       valid_lft forever preferred_lft forever
2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000
    link/ether 00:0a:35:00:01:26 brd ff:ff:ff:ff:ff:ff
    inet 192.168.0.9/24 brd 192.168.0.255 scope global dynamic eth0
       valid_lft 172739sec preferred_lft 172739sec
    inet6 fe80::20a:35ff:fe00:126/64 scope link
       valid_lft forever preferred_lft forever
```

1.4.2 Establish a connection

Now that the network is configured, you need to copy your SSH key over to the Beta.

While connected to the AXIOM Beta via USB, use the following commands:

cd ~/.ssh/

cp authorized_keys authorized_keys.orig

Strictly speaking, the cp (copy) command isn't needed, but it is good practice to make a copy of a file before editing it, just in case.

The next command adds your SSH key to authorized_keys, the file that lists the public keys allowed to log in via SSH without a password.

First, on your computer, copy your public key (the contents of your .pub key file) with Ctrl+C / Cmd+C. IMPORTANT: make sure you copy the whole key, but not the newline at its end.

Next, use the command below to add your key to the authorized_keys file. The command is structured as follows:

- echo - writes out the text after it

- "[your key]" - paste your key here (Ctrl+V / Cmd+V) in place of [your key]; do not type in [your key] literally!

- >> - appends the text to the end of the existing file (a single > would overwrite the file and delete any keys already in it)

- authorized_keys - make sure to spell this correctly.

As a heads-up: when you paste in your SSH key, the terminal window may wrap the text so that it looks like a bit of a mess. This is normal, and you can carry on typing the rest of the command.

echo "[your key]" >> authorized_keys

Now that you have added your key, try to log in to the Beta via SSH.

On your computer, use the following command. Use the IP address you set or found earlier; the one below is just an example.

ssh root@192.168.0.9

If you have logged in successfully, you should now see a prompt like this:

```
Last login: Fri Jun 10 15:12:17 2016
sourcing .bashrc ...
[root@beta ~]#
```

Congratulations, you have now set up the Beta's networking and SSH configuration.

The minicom or screen method you used to connect is no longer needed.

2 Start the camera

In the future, the camera is meant to initialize all systems and train the sensor communication automatically when powered on; for now, you have to start this manually.

Run the following command on the camera (e.g. in your SSH session):

./kick_manual.sh

This produces a lot of output; its end looks like this:

```
..
mapped 0x8030C000+0x00004000 to 0x8030C000.
mapped 0x80000000+0x00400000 to 0xA6961000.
mapped 0x18000000+0x08000000 to 0x18000000.
read buffer = 0x18390200
selecting RFW [bus A] ...
found MXO2-1200HC [00000001001010111010000001000011]
[root@beta ~]#
```

If you look at the back of the camera, you will now see a blue LED near the top flashing very fast. This indicates that everything is running.

If you turn the camera off, you need to re-run this command after turning it back on.

3 Capture an image

Write the image to the file snap.raw16 and display it with ImageMagick (run this on your computer and replace *BETA-IP* with the camera's IP address):

ssh root@*BETA-IP* "./cmv_snap3 -e 10ms" | tee snap.raw16 | display -size 4096x3072 -depth 16 gray:-

The same in 12-bit format (more efficient, same data):

ssh root@*BETA-IP* "./cmv_snap3 -2 -e 10ms" | tee snap.raw12 | display -size 4096x3072 -depth 12 gray:-
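The 12-bit stream is smaller because two pixels share three bytes instead of four: a full 4096x3072 frame takes 4096 x 3072 x 2 = 25,165,824 bytes as raw16 but 4096 x 3072 x 1.5 = 18,874,368 bytes as raw12. The Ruby sketch below unpacks such a stream into integer pixel values. It assumes MSB-first packing (the first pixel's upper 8 bits, then its lower 4 bits in the high nibble of the second byte); this layout is an assumption, not taken from the cmv_snap3 source, so compare against a known image before relying on it. The function names are hypothetical.

```ruby
# Sketch: unpack 12-bit packed pixels (two pixels in three bytes).
# Assumed layout, MSB first: AAAAAAAA AAAABBBB BBBBBBBB
def unpack_raw12(bytes)
  data = bytes.unpack("C*")
  raise ArgumentError, "length must be a multiple of 3" unless (data.size % 3).zero?
  data.each_slice(3).flat_map do |b0, b1, b2|
    [(b0 << 4) | (b1 >> 4), ((b1 & 0x0F) << 8) | b2]
  end
end

# Image data size in bytes for a frame of the given geometry and bit depth.
def frame_bytes(width, height, bits)
  width * height * bits / 8
end

puts frame_bytes(4096, 3072, 16) # raw16: 25165824
puts frame_bytes(4096, 3072, 12) # raw12: 18874368
p unpack_raw12([0xAB, 0xCD, 0xEF].pack("C*")) # [2748, 3567], i.e. 0xABC and 0xDEF
```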

4 Overlay Images

Clear the overlay:

./mimg -a -o -P 0

Enable the overlay:

gen_reg 11 0x0104F000

Disable the overlay:

gen_reg 11 0x0004F000
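The enable and disable values above differ in a single bit: 0x0104F000 XOR 0x0004F000 = 0x01000000, i.e. bit 24. The Ruby sketch below computes the register value from that observation. Treating bit 24 as the overlay switch, with the remaining bits left as they are, is an inference from these two values, not a documented register layout; the names OVERLAY_ENABLE and overlay_value are hypothetical.

```ruby
# Sketch: gen_reg 11 values for the overlay, assuming bit 24 is the
# overlay enable flag (inferred from the two values above).
OVERLAY_ENABLE = 1 << 24 # 0x01000000

# Set or clear the assumed enable bit, leaving all other bits unchanged.
def overlay_value(base, enable)
  enable ? (base | OVERLAY_ENABLE) : (base & ~OVERLAY_ENABLE)
end

puts format("0x%08X", overlay_value(0x0004F000, true))  # prints 0x0104F000
puts format("0x%08X", overlay_value(0x0104F000, false)) # prints 0x0004F000
```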

5 Additional outputs

Display voltages and current flow:

./pac1720_info.sh

Output:

```
ZED_5V 5.0781 V [2080] +29.0625 mV [2e8] +968.75 mA
BETA_5V 5.1172 V [20c0] +26.6016 mV [2a9] +886.72 mA
HDN 3.2422 V [14c0] -0.0391 mV [fff] -1.30 mA
PCIE_N_V 3.2422 V [14c0] -0.0391 mV [fff] -1.30 mA
HDS 3.2422 V [14c0] +0.0000 mV [000] +0.00 mA
PCIE_S_V 3.2422 V [14c0] -0.0391 mV [fff] -1.30 mA
RFW_V 3.2812 V [1500] +0.2734 mV [007] +9.11 mA
IOW_V 3.2422 V [14c0] +0.0000 mV [000] +0.00 mA
RFE_V 3.2812 V [1500] +0.2344 mV [006] +7.81 mA
IOE_V 3.2812 V [1500] +0.0781 mV [002] +2.60 mA
VCCO_35 2.5000 V [1000] +0.6641 mV [011] +22.14 mA
VCCO_13 2.4609 V [ fc0] +1.2500 mV [020] +41.67 mA
PCIE_IO 2.4609 V [ fc0] -0.0391 mV [fff] -1.30 mA
VCCO_34 2.4609 V [ fc0] +0.8203 mV [015] +27.34 mA
W_VW 1.9922 V [ cc0] -0.0781 mV [ffe] -2.60 mA
N_VW 3.1641 V [1440] +0.0000 mV [000] +0.00 mA
N_VN 1.8750 V [ c00] +15.4297 mV [18b] +514.32 mA
N_VE 3.1641 V [1440] +0.0000 mV [000] +0.00 mA
E_VE 1.9922 V [ cc0] -0.0391 mV [fff] -1.30 mA
S_VE 1.9531 V [ c80] +0.0000 mV [000] +0.00 mA
S_VS 2.9297 V [12c0] +0.3906 mV [00a] +13.02 mA
S_VW 1.9922 V [ cc0] -0.1562 mV [ffc] -5.21 mA
```
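To get the power drawn on each rail, multiply voltage and current (P = V x I). A minimal Ruby sketch that parses output in the format shown above (one rail per line); the regular expression is written for exactly this layout, and the helper name rail_power is hypothetical.

```ruby
# Sketch: parse pac1720_info.sh output (one rail per line, as shown above)
# and compute the power per rail as voltage * current.
RAIL_LINE = /^(\S+)\s+([\d.]+) V \[\s*\h+\]\s+([+-][\d.]+) mV \[\s*\h+\]\s+([+-][\d.]+) mA/

# Returns a hash of rail name => power in watts.
def rail_power(output)
  output.each_line.map { |line|
    m = RAIL_LINE.match(line)
    next unless m # skip lines that do not look like a rail reading
    volts = m[2].to_f
    milliamps = m[4].to_f
    [m[1], volts * milliamps / 1000.0]
  }.compact.to_h
end

sample = <<~OUT
  ZED_5V 5.0781 V [2080] +29.0625 mV [2e8] +968.75 mA
  BETA_5V 5.1172 V [20c0] +26.6016 mV [2a9] +886.72 mA
OUT
rail_power(sample).each { |name, watts| puts format("%-8s %6.3f W", name, watts) }
```

Summing rail_power(...).values gives a rough total, keeping in mind that some rails may be fed from others.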

Read the temperature of the Zynq:

./zynq_info.sh

Output:

ZYNQ Temp 49.9545 °C

6 HDMI Modes

cmv_hdmi3.bit is the FPGA bitstream loaded for the HDMI interface. We use symlinks to switch this file easily.

6.1 Enable 1080p60 Mode

```
rm -f cmv_hdmi3.bit
ln -s cmv_hdmi3_60.bit cmv_hdmi3.bit
sync
reboot now
```

6.2 Enable 1080p30 Mode

```
rm -f cmv_hdmi3.bit
ln -s cmv_hdmi3_30.bit cmv_hdmi3.bit
sync
reboot now
```

7 Tools

7.1 cmv_reg

Get and set CMV12000 image sensor registers (the CMV12000 has 128 registers of 16 bits each).

Details are in the sensor datasheet: https://github.com/apertus-open-source-cinema/beta-hardware/tree/master/Datasheets

Examples:

Read register 115 (which contains the analog gain settings):

cmv_reg 115

Return value:

0x00

This means the sensor is currently operating at analog gain x1 (unity gain).

Set register 115 to gain x2:

cmv_reg 115 1

7.2 set_gain.sh

Set gain and related settings (ADC range and offsets).

```
./set_gain.sh 1
./set_gain.sh 2
./set_gain.sh 3/3   # almost the same as gain 1
./set_gain.sh 3
./set_gain.sh 4
```

7.3 cmv_snap3

Capture and store image snapshots and sequences.

Example:

ssh root@cameraip "./cmv_snap3 -B0x08000000 -x -N8 -L1024 -2 -e 5ms" >/tmp/test.seq12

- -B sets the buffer base and is currently required. 0x08000000 means 128 MB: I have squeezed Linux into 128 MB, which leaves 1024 MB - 128 MB for sequences. Please note that there is no safety net and no plausibility checking, i.e. if you reach or specify addresses where Linux is running, a reset will probably be necessary.

- -x skips the first frame (optional), because I noticed that the first frame after a pause looks different.

- -N sets the number of frames to capture (again, please note: if you go beyond 0x3FFFFFFF, you overwrite the Linux area).

- -L sets the number of lines (even values only, max 3072).

- -2 switches to 12-bit output (the only format I have tested :)

- -e sets the exposure, as before.
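Since there are no plausibility checks, it helps to work out beforehand how many frames fit. Between the buffer base 0x08000000 and the 0x3FFFFFFF limit there are 0x38000000 bytes (896 MiB). The Ruby sketch below divides that by the frame size, assuming each frame takes exactly 4096 x lines x 12 / 8 bytes (as in the split formula below) and that cmv_snap3 stores nothing else in the buffer; the helper max_frames is hypothetical.

```ruby
# Sketch: upper bound for -N, assuming frames are stored back to back from
# the -B base (0x08000000) up to the 0x3FFFFFFF limit, each taking exactly
# 4096 * lines * 12 / 8 bytes.
BUFFER_BASE = 0x08000000
BUFFER_END  = 0x40000000 # first address past 0x3FFFFFFF

def max_frames(lines, width: 4096, bits: 12)
  unless lines.even? && lines.between?(2, 3072)
    raise ArgumentError, "-L takes even values up to 3072"
  end
  frame_size = width * lines * bits / 8
  (BUFFER_END - BUFFER_BASE) / frame_size
end

puts max_frames(1024) # 149
puts max_frames(3072) # 49
```

Under these assumptions, the example above (-N8 -L1024) uses 8 x 6,291,456 bytes = 48 MiB, well within the limit.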

To split one seq12 file into multiple individual files:

split -b <size> <file.seq12> <prefix>

- <size>: the number of bytes in one image (4096 x number-of-lines x 12 bit / 8 bit)

- <prefix>: the name prefix for the output files

- optionally add --additional-suffix=.raw12 to give the files the proper extension (an option of GNU split)

- add -d or --numeric-suffixes to number the split files numerically

Example for 1024 lines (input file: test.seq12):

split -b 6291456 -d test.seq12 output --additional-suffix=.raw12

Example for 1080 lines (input file: test.seq12):

split -b 6635520 -d test.seq12 output --additional-suffix=.raw12
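The sizes used above follow directly from the formula: 4096 x 1024 x 12 / 8 = 6,291,456 bytes and 4096 x 1080 x 12 / 8 = 6,635,520 bytes. A small Ruby sketch that computes the split -b value and checks that a sequence file holds a whole number of frames (helper names hypothetical):

```ruby
# Sketch: the -b value for split and the frame count of a .seq12 file.
def frame_bytes(lines, width: 4096, bits: 12)
  width * lines * bits / 8
end

# Whole frames in a sequence of seq_size bytes; a remainder usually means
# the line count does not match the capture.
def frames_in(seq_size, lines)
  count, rest = seq_size.divmod(frame_bytes(lines))
  raise ArgumentError, "size is not a multiple of the frame size" unless rest.zero?
  count
end

puts "split -b #{frame_bytes(1080)} -d test.seq12 output --additional-suffix=.raw12"
```

For a real file, frames_in(File.size("test.seq12"), 1024) tells you how many output files split will produce.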

Use ImageMagick to convert a raw12 file into a color preview image:

cat test.raw12 | convert \( -size 4096x3072 -depth 12 gray:- \) \( -clone 0 -crop -1-1 \) \( -clone 0 -crop -1+0 \) \( -clone 0 -crop +0-1 \) -sample 2048x1536 \( -clone 2,3 -average \) -delete 2,3 -swap 0,1 +swap -combine test_color.png
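Roughly, the command above shifts the mosaic so that each colour site of the Bayer pattern lands on the sampling grid, samples each version down to 2048x1536, averages the two green sites and combines the result into an RGB image. The same idea on a plain array of pixel values, as a Ruby sketch: the RGGB layout in the default offsets is an assumption for illustration (reorder the offsets to match the sensor), and the function name bayer_preview is hypothetical.

```ruby
# Sketch: half-resolution RGB preview from a Bayer mosaic (2x2 cells).
# mosaic: array of rows of integer pixel values; offsets are [row, column]
# inside each 2x2 cell, with an assumed RGGB layout by default.
def bayer_preview(mosaic, r: [0, 0], g1: [0, 1], g2: [1, 0], b: [1, 1])
  (0...mosaic.size / 2).map do |cy|
    (0...mosaic[0].size / 2).map do |cx|
      at = ->(dy, dx) { mosaic[2 * cy + dy][2 * cx + dx] }
      # red site, average of the two green sites, blue site
      [at.(*r), (at.(*g1) + at.(*g2)) / 2.0, at.(*b)]
    end
  end
end

# 2x4 mosaic -> 1x2 preview
p bayer_preview([[10, 20, 11, 21], [30, 40, 31, 41]])
```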

Capture directly to DNG on the camera, without saving the raw12 file:

./cmv_snap3 -2 -b -r -e 10ms | raw2dng snap.DNG

8 General Info

Stop HDMI live stream:

fil_reg 15 0

Start HDMI live stream:

fil_reg 15 0x01000100

8.1 Operating System

At the moment, we are able to reuse an Arch Linux image for the ZedBoard on the MicroZed. To do so, some software such as the FSBL and U-Boot was added. More information can be found here: http://stefan.konink.de/contrib/apertus/

I will commit myself to producing a screencast of the entire bootstrap process, from the Xilinx software to booting the MicroZed.

I would suggest running Arch Linux on the AXIOM Beta for development purposes. If we need to shrink it down, that will be quite trivial. Obviously we can take the embedded approach from there, as long as we don't fall into the trap of libc implementations with broken threading.

8.1.1 Package Manager Pacman

Update all package definitions and the database from the Internet:

pacman -Sy

Install the lighttpd web server on the Beta:

pacman -S lighttpd

Install PHP on the Beta:

pacman -S php php-cgi

Follow these instructions: https://wiki.archlinux.org/index.php/lighttpd#PHP

Start the webserver:

systemctl start lighttpd

8.2 Moving Image Raw Recording/Processing

Note: This only works with the experimental raw mode enabled on the AXIOM Beta 1080p60 (A+B frames) and has so far only been tested with the Atomos Shogun.

To measure the required compensations with a different recorder, follow this guide.

Postprocessing software to recover the raw information (DNG sequences) is on github: https://github.com/apertus-open-source-cinema/misc-tools-utilities/tree/master/raw-via-hdmi

Required packages: ffmpeg, build-essential

Mac requirements for compiling: gcc 4.9 (via Homebrew):

brew install homebrew/versions/gcc49

Also install ffmpeg.

To do all the raw processing in one single command (after the ffmpeg codec copy step described below):

./hdmi4k INPUT.MOV - | ./raw2dng --fixrnt --pgm --black=120 frame%05d.dng

8.2.1 hdmi4k

Converts a video file recorded in AXIOM raw to a PGM image sequence and applies the darkframe (needs to be created beforehand).

Currently clips must go through ffmpeg before hdmi4k can read them:

ffmpeg -i CLIP.MOV -c:v copy OUTPUT.MOV

To cut out the part of a video between IN and OUT with ffmpeg while maintaining the original encoding data:

ffmpeg -i CLIP.MOV -ss IN_SECONDS -t DURATION_SECONDS -c:v copy OUTPUT.MOV

```
hdmi4k HDMI RAW converter for Axiom BETA

Usage:
  ./hdmi4k clip.mov
  raw2dng frame*.pgm [options]

Calibration files:
  hdmi-darkframe-A.ppm, hdmi-darkframe-B.ppm:
    averaged dark frames from the HDMI recorder (even/odd frames)

Options:
  -        : Output PGM to stdout (can be piped to raw2dng)
  --3x3    : Use 3x3 filters to recover detail (default 5x5)
  --skip   : Toggle skipping one frame (try if A/B autodetection fails)
  --swap   : Swap A and B frames inside a frame pair (encoding bug?)
  --onlyA  : Use data from A frames only (for bad takes)
  --onlyB  : Use data from B frames only (for bad takes)
```

8.2.2 raw2dng

Converts a PGM image sequence to a DNG sequence.

DNG converter for Apertus .raw12 files

Usage:

./raw2dng input.raw12 [input2.raw12] [options]

cat input.raw12 | ./raw2dng output.dng [options]

Flat field correction:

- each gain requires two reference images (N=1,2,3,4):

- darkframe-xN.pgm will be subtracted (data is x8)

- gainframe-xN.pgm will be multiplied (1.0 = 16384)

- reference images are 16-bit PGM, in the current directory.
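Read literally, the two rules above combine into corrected = (raw - dark / 8) x gain / 16384 per pixel. A Ruby sketch under that reading; the exact order of operations, rounding and clipping inside raw2dng may differ, and the names used here are hypothetical.

```ruby
# Sketch of per-pixel flat field correction, reading the rules above as:
# dark frame values are stored multiplied by 8, gain 16384 means 1.0.
DARK_SCALE = 8.0
GAIN_UNITY = 16_384.0

def flat_field(raw, dark, gain)
  (raw - dark / DARK_SCALE) * (gain / GAIN_UNITY)
end

puts flat_field(1000, 800, 16_384) # 900.0: dark frame 800/8 = 100 removed, gain 1.0
```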

Options:

--black=%d : Set black level (default: autodetect)

- negative values allowed

--white=%d : Set white level (default: 4095)

- if too high, you may get pink highlights

- if too low, useful highlights may clip to white

--width=%d : Set image width (default: 4096)

--height=%d : Set image height

- default: autodetect from file size

- if input is stdin, default is 3072

--swap-lines : Swap lines in the raw data

- workaround for an old Beta bug

--hdmi : Assume the input is a memory dump

- used for HDMI recording experiments

--lut : Linearize sensor response with per-channel LUTs

- probably correct only for one single camera :)

--fixpn : Fix pattern noise (slow)

--no-darkframe : Disable dark frame (if darkframe.pgm is present)

--no-gainframe : Disable gain frame (if gainframe.pgm is present)

Debug options:

--dump-regs : Dump sensor registers from the metadata block (no output DNG)

--fixpn-dbg-denoised: Pattern noise: show denoised image

--fixpn-dbg-noise : Pattern noise: show noise image (original - denoised)

--fixpn-dbg-mask : Pattern noise: show masked areas (edges and highlights)

--fixpn-dbg-row : Pattern noise: debug rows (default: columns)

Example:

./raw2dng --fixrnt --pgm --black=120 frame%05d.dng

8.2.2.1 Compiling raw2dng

Compiling raw2dng on a 64-bit system requires the gcc-multilib package.

Ubuntu:

sudo apt-get install gcc-multilib

8.2.3 EDL Parser

This script can take EDLs to reduce the raw conversion/processing to the essential frames that are actually used in an edit. This way a finished video edit can be converted to raw DNG sequences easily.

Requirements: Ruby

```ruby
#!/usr/bin/env ruby
# EDL parser: reads an EDL and prints a bash script that cuts every used clip
# (plus 10 frames of handle on each side) and renders it to DNG.
# All timecode arithmetic assumes 60 frames per second.

FPS = 60

# Fill in your work directory before running the script.
path_to_workdir = "'/Volumes/getztron2/April Fool 2016/V'"

# The reminder goes to stderr so that it does not end up in the generated script.
warn "BEFORE EXECUTION, PLS FILL IN YOUR WORK DIRECTORY IN THE SCRIPT (path_to_workdir)"

# "HH:MM:SS:FF" -> absolute frame number
def tc_to_frames(tc)
  h, m, s, f = tc.split(":").map(&:to_i)
  ((h * 60 + m) * 60 + s) * FPS + f
end

clip   = []
tc_in  = []
tc_out = []

File.foreach(ARGV.first) do |line|
  clip << line.scan(/NAME:\s(.+)/)
  # Event lines carry four timecodes (source in/out, record in/out).
  tc_in  << line.scan(/(\d\d:\d\d:\d\d:\d\d).\d\d:\d\d:\d\d:\d\d.\d\d:\d\d:\d\d:\d\d.\d\d:\d\d:\d\d:\d\d/)
  tc_out << line.scan(/\s\s\s\d\d:\d\d:\d\d:\d\d\s(\d\d:\d\d:\d\d:\d\d)/)
end

# A clip name comment follows its event line: shift the names up by one line
# and drop the timecodes of events that have no clip name.
clip.delete_at(0)
clip.each_index do |c|
  next unless clip[c].empty?
  tc_in[c]  = []
  tc_out[c] = []
end

tc_in  = tc_in.reject(&:empty?)
tc_out = tc_out.reject(&:empty?)
clip   = clip.reject(&:empty?)

puts "#!/bin/bash"
total_frames = 0

tc_in.each_index do |i|
  frames_in  = tc_to_frames(tc_in[i][0][0])
  frames_out = tc_to_frames(tc_out[i][0][0])

  # 10 frames of handle before the in point and 10 after the out point
  framecount = frames_out - frames_in + 20
  start      = frames_in - 10

  # Split the start frame into ffmpeg's HH:MM:SS.mmm notation.
  hh, rest = start.divmod(60 * 60 * FPS)
  mm, rest = rest.divmod(60 * FPS)
  ss, ff   = rest.divmod(FPS)
  ms       = ff * 1000 / FPS

  name = clip[i][0][0].chomp("\r")

  puts "mkdir #{i}"
  # Milliseconds need three digits (%03d); with two, 16 ms would become .16 s.
  puts "ffmpeg -ss #{format('%02d:%02d:%02d.%03d', hh, mm, ss, ms)} -i #{path_to_workdir}/#{name} -frames:v #{framecount} -c:v copy p.MOV -y"
  puts "./render.sh p.MOV &&"
  puts "mv frame*.DNG #{i}/"
  # Optional further steps (not emitted):
  #   hdmi4k #{path_to_workdir}/frame*[0-9].ppm --ufraw-gamma --soft-film=1.5 --fixrnt --offset=500 &&
  #   ffmpeg -i #{path_to_workdir}/frame%04d-out.ppm -vcodec prores -profile:v 3 #{name}_#{i}_new.mov -y &&
  puts "\n\n\n"

  total_frames += framecount
end

puts "# Total frame count: #{total_frames}"
```

Redirect the script's output into a file to get a Bash shell script.

Note from the programmer: This is really unsophisticated and messy. Feel free to alter and share improvements.
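The timecode arithmetic of the parser assumes 60 frames per second, so an hour is 60 x 60 x 60 = 216,000 frames. The Ruby sketch below isolates the two conversions (helper names hypothetical). ffmpeg reads the fractional part of an -ss value as a decimal fraction of a second, so milliseconds need three digits: 16 ms written as ".16" would be read as 160 ms.

```ruby
# Sketch: the 60 fps timecode arithmetic used by the EDL parser.
FPS = 60

# "HH:MM:SS:FF" -> absolute frame number (base 10, so "08" is parsed as 8)
def tc_to_frames(tc)
  h, m, s, f = tc.split(":").map { |x| Integer(x, 10) }
  ((h * 60 + m) * 60 + s) * FPS + f
end

# absolute frame number -> ffmpeg -ss value "HH:MM:SS.mmm"
def frames_to_ss(frames)
  h, rest = frames.divmod(3600 * FPS)
  m, rest = rest.divmod(60 * FPS)
  s, f = rest.divmod(FPS)
  format("%02d:%02d:%02d.%03d", h, m, s, f * 1000 / FPS)
end

start = tc_to_frames("01:00:05:30") - 10 # in point minus 10 frames of handle
puts frames_to_ss(start) # prints 01:00:05.333
```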

8.3 Userspace

Arch Linux comes with systemd, one advantage of which is an incredibly fast boot process. Standard tools such as sshd and dhcpcd are preinstalled. We may need other tools, such as an FTP server or a web server:

- FTP: I would suggest vsftpd here.

- Web server: I am able to modify Cherokee with custom C code to talk directly to specific camera sections. Cherokee already powers the WiFi module of the GoPro.

One idea for storing camera-relevant parameters inside the camera and providing access to them from most programming languages is to use a database such as Berkeley DB: http://en.wikipedia.org/wiki/Berkeley_DB

8.3.1 Firmware Backup

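The entire camera firmware is stored on a microSD card plugged into the MicroZed. To back up the entire firmware, plug the microSD card into a Linux PC and do the following:

1. Find out which device the microSD card is:

cat /proc/partitions

2. Make sure none of the card's partitions are mounted (replace sdc with the device name you found in step 1; the pattern matches all of its partitions, e.g. /dev/sdc1, and messages about partitions that are not mounted can be ignored):

umount /dev/sdc?*

3. Clone the card to a file (this usually requires root rights):

dd if=/dev/sdc of=sdimage.img bs=4M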

Here is a guide that covers doing the same on Mac and Windows: http://raspberrypi.stackexchange.com/questions/311/how-do-i-backup-my-raspberry-pi